1. Scenario

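In production we need to collect, query and analyze the logs produced across the system. This example uses Fluentd for log collection, Elasticsearch for log storage, and Kibana for log search and analysis.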

2. Installation

2.1 Create the dashboard user (ServiceAccount)

sa.yml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard
roleRef:
  kind: ClusterRole
  name: view
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: dashboard
  namespace: kube-system
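If you generate manifests from a script instead of writing YAML by hand, the two objects in sa.yml are just data. The minimal Python sketch below builds them as a v1 List, which kubectl apply -f also accepts as JSON. The function name dashboard_rbac and the idea of writing it to a file such as sa.json are assumptions for illustration. The point it makes explicit is that the binding's subjects entry must name the same ServiceAccount and namespace; otherwise the view role is never granted to the pods that run as dashboard.

```python
import json


def dashboard_rbac(namespace: str = "kube-system", name: str = "dashboard") -> dict:
    """Hypothetical helper: the same two objects as sa.yml, wrapped in a v1 List."""
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": name, "namespace": namespace},
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        # ClusterRoleBinding is cluster-scoped, so its metadata has no namespace
        "metadata": {"name": name},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": "view",  # built-in read-only ClusterRole
        },
        # Must point at exactly the ServiceAccount created above
        "subjects": [{"kind": "ServiceAccount", "name": name, "namespace": namespace}],
    }
    return {"apiVersion": "v1", "kind": "List", "items": [service_account, binding]}


if __name__ == "__main__":
    print(json.dumps(dashboard_rbac(), indent=2))
```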

2.2 Create the PersistentVolume

Create a PersistentVolume (backed by NFS here) to serve as the disk for Elasticsearch's data:

pv.yml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: elk-log-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /opt/data/kafka0
    server: 192.168.1.140
    readOnly: false
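The claim elk-log declared in logging.yml (5Gi, ReadWriteMany) has to bind to this volume. The Python sketch below is a simplified model of the main matching rules: same storage class (this PV sets none, which counts as the empty class), every requested access mode supported by the PV, and enough capacity. It deliberately ignores selectors, volume modes and node affinity, and its class and function names are hypothetical.

```python
from dataclasses import dataclass

# Binary suffixes only; decimal suffixes (k, M, G) are omitted in this sketch.
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(q: str) -> int:
    """Convert a quantity such as '5Gi' to bytes."""
    for suffix, factor in _BINARY.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)


@dataclass(frozen=True)
class Volume:
    capacity: str
    access_modes: frozenset
    storage_class: str = ""


@dataclass(frozen=True)
class Claim:
    request: str
    access_modes: frozenset
    storage_class: str = ""


def can_bind(pv: Volume, pvc: Claim) -> bool:
    """Simplified subset of the checks the PV controller applies."""
    return (
        pv.storage_class == pvc.storage_class
        and pvc.access_modes <= pv.access_modes
        and parse_quantity(pv.capacity) >= parse_quantity(pvc.request)
    )


elk_log_pv = Volume("5Gi", frozenset({"ReadWriteMany"}))
elk_log_claim = Claim("5Gi", frozenset({"ReadWriteMany"}))
print(can_bind(elk_log_pv, elk_log_claim))  # True
print(can_bind(elk_log_pv, Claim("10Gi", frozenset({"ReadWriteMany"}))))  # False
```

In this model a claim that picks up a cluster's default StorageClass (for example "standard") never matches this class-less PV, which is why such a claim would be provisioned elsewhere instead of binding here.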

2.3 Fluentd configuration (ConfigMap)

Without this step, the fluentd-es image fails at startup with the following error:

2018-03-07 08:35:18 +0000 [info]: adding filter pattern="kubernetes.**" type="kubernetes_metadata"
2018-03-07 08:35:19 +0000 [error]: config error file="/etc/td-agent/td-agent.conf" error="Invalid Kubernetes API v1 endpoint https://172.21.0.1:443/api: SSL_connect returned=1 errno=0 state=error: certificate verify failed"
2018-03-07 08:35:19 +0000 [info]: process finished code=256
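The failing step is a TLS handshake against the API server: the client does not trust the certificate it is shown. The Python sketch below contrasts the two ways out. Turning verification off is roughly what verify_ssl false does in the Fluentd config; verifying against the cluster CA, which Kubernetes mounts into every pod at the standard service-account path used below, keeps the check in place. The function name api_ssl_context is hypothetical, and this is an analogy in Python's ssl module, not the Ruby plugin's own code.

```python
import os
import ssl

# Standard in-pod location of the cluster CA bundle for the service account.
SA_CA_FILE = "/var/run/secrets/kubernetes.io/serviceaccount/ca.crt"


def api_ssl_context(ca_file: str = SA_CA_FILE, verify: bool = True) -> ssl.SSLContext:
    """Build a client TLS context for talking to the API server."""
    if not verify:
        # Accept any server certificate (comparable to verify_ssl false).
        ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
        ctx.check_hostname = False  # must be disabled before setting CERT_NONE
        ctx.verify_mode = ssl.CERT_NONE
        return ctx
    if os.path.exists(ca_file):
        # Trust the cluster's own CA, which signs the API server certificate.
        return ssl.create_default_context(cafile=ca_file)
    # Outside a pod the CA file is absent; fall back to the system trust store.
    return ssl.create_default_context()
```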

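The kubernetes_metadata filter cannot verify the API server's TLS certificate. The ConfigMap below supplies a replacement td-agent.conf that sets verify_ssl false on that filter: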

cm.yml:

apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-conf
  namespace: kube-system
data:
  td-agent.conf: |
    <match fluent.**>
      type null
    </match>
    # Example:
    # {"log":"[info:2016-02-16T16:04:05.930-08:00] Some log text here\n","stream":"stdout","time":"2016-02-17T00:04:05.931087621Z"}
    <source>
      type tail
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      time_format %Y-%m-%dT%H:%M:%S.%NZ
      tag kubernetes.*
      format json
      read_from_head true
    </source>
    <filter kubernetes.**>
      type kubernetes_metadata
      verify_ssl false
    </filter>
    <source>
      type tail
      format syslog
      path /var/log/messages
      pos_file /var/log/messages.pos
      tag system
    </source>
    <match **>
      type elasticsearch
      user "#{ENV['FLUENT_ELASTICSEARCH_USER']}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD']}"
      log_level info
      include_tag_key true
      host elasticsearch-logging
      port 9200
      logstash_format true
      # Set the chunk limit the same as for fluentd-gcp.
      buffer_chunk_limit 2M
      # Cap buffer memory usage to 2MiB/chunk * 32 chunks = 64 MiB
      buffer_queue_limit 32
      flush_interval 5s
      # Never wait longer than 30 seconds between retries.
      max_retry_wait 30
      # Disable the limit on the number of retries (retry forever).
      disable_retry_limit
      # Use multiple threads for processing.
      num_threads 8
    </match>
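To make the container-log pipeline concrete: in_tail replaces the * in tag kubernetes.* with the file path (slashes turned into dots), and the kubernetes_metadata filter reads pod, namespace and container out of the file name under /var/log/containers, whose names follow the pattern pod_namespace_container-containerid.log. The Python sketch below approximates both steps plus the JSON and time parsing of the example line in the config. The regular expression is a simplified stand-in for the plugin's own, and the helper names and sample pod name are hypothetical.

```python
import json
import re
from datetime import datetime, timezone

# Simplified approximation of the file-name pattern the metadata filter parses.
_CONTAINER_LOG = re.compile(
    r"var\.log\.containers\."
    r"(?P<pod_name>[^_]+)_(?P<namespace>[^_]+)_"
    r"(?P<container_name>.+)-(?P<docker_id>[a-z0-9]{64})\.log$"
)


def tail_tag(tag_pattern: str, path: str) -> str:
    """Expand 'kubernetes.*' the way in_tail does: '*' becomes the dotted path."""
    return tag_pattern.replace("*", path.lstrip("/").replace("/", "."))


def kubernetes_fields(tag: str) -> dict:
    """Pod, namespace, container and container ID encoded in the tag, if any."""
    m = _CONTAINER_LOG.search(tag)
    return m.groupdict() if m else {}


def parse_docker_line(line: str) -> dict:
    """Decode one Docker json-file line (format json in the source block)."""
    record = json.loads(line)
    # time_format uses %N (nanoseconds); datetime only holds microseconds,
    # so the fraction is truncated to six digits here.
    stamp, frac = record["time"].rstrip("Z").split(".")
    record["time"] = datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%S").replace(
        microsecond=int(frac[:6].ljust(6, "0")), tzinfo=timezone.utc
    )
    return record


docker_id = "a1" * 32  # made-up 64-character container ID
path = f"/var/log/containers/kibana-logging-abcde_kube-system_kibana-logging-{docker_id}.log"
tag = tail_tag("kubernetes.*", path)
print(tag)
print(kubernetes_fields(tag))

line = ('{"log":"[info:2016-02-16T16:04:05.930-08:00] Some log text here\\n",'
        '"stream":"stdout","time":"2016-02-17T00:04:05.931087621Z"}')
print(parse_docker_line(line)["time"])
```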

2.4 Complete manifest

logging.yml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: elasticsearch-logging-v1
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 2
  selector:
    k8s-app: elasticsearch-logging
    version: v1
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccount: dashboard
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/google-containers/elasticsearch:v2.4.1-1
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: es-persistent-storage
          mountPath: /data
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: es-persistent-storage
        persistentVolumeClaim:
          claimName: elk-log

---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: elk-log
  namespace: kube-system
spec:
  accessModes:
  - ReadWriteMany
  # Empty class: bind to the pre-created elk-log-pv rather than a default StorageClass
  storageClassName: ""
  resources:
    requests:
      storage: 5Gi
  #selector:
  #  matchLabels:
  #    release: "stable"
  #  matchExpressions:
  #    - {key: environment, operator: In, values: [dev]}

---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch-logging

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es-v1.22
  namespace: kube-system
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    version: v1.22
spec:
  # apps/v1 requires an explicit selector matching the template labels
  selector:
    matchLabels:
      k8s-app: fluentd-es
      version: v1.22
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        version: v1.22
      # This annotation ensures that fluentd does not get evicted if the node
      # supports critical pod annotation based priority scheme.
      # Note that this does not guarantee admission on the nodes (#40573).
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccount: dashboard
      # Tolerate the master taint so fluentd also runs on the master
      # (replaces the old scheduler.alpha.kubernetes.io/tolerations annotation)
      tolerations:
      - key: node.alpha.kubernetes.io/ismaster
        effect: NoSchedule
      containers:
      - name: fluentd-es
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/fluentd-elasticsearch:1.22
        command:
        - '/bin/sh'
        - '-c'
        # Append both stdout and stderr to the log file
        - '/usr/sbin/td-agent >> /var/log/fluentd.log 2>&1'
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
        - name: config-volume
          mountPath: /etc/td-agent/
          readOnly: true
      #nodeSelector:
      #  alpha.kubernetes.io/fluentd-ds-ready: "true"
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: config-volume
        configMap:
          name: fluentd-conf

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana-logging
  namespace: kube-system
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana-logging
  template:
    metadata:
      labels:
        k8s-app: kibana-logging
    spec:
      containers:
      - name: kibana-logging
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/kibana:v4.6.1-1
        resources:
          # request = limit for CPU; memory is not set, so the QoS class is Burstable, not Guaranteed
          limits:
            cpu: 100m
          requests:
            cpu: 100m
        env:
        - name: "ELASTICSEARCH_URL"
          value: "http://elasticsearch-logging:9200"
        # Must match the proxy path used to reach Kibana (see kubectl cluster-info)
        - name: "KIBANA_BASE_URL"
          value: "/api/v1/namespaces/kube-system/services/kibana-logging/proxy"
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP

---
apiVersion: v1
kind: Service
metadata:
  name: kibana-logging
  namespace: kube-system
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "Kibana"
spec:
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
  selector:
    k8s-app: kibana-logging
  type: ClusterIP
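The Services and controllers in logging.yml find their pods purely by labels, so a typo in a single label silently leaves a Service with no endpoints. The Python sketch below checks the equality-based selectors against the pod-template labels, both transcribed by hand from the manifest above; the dictionary and function names are hypothetical.

```python
# Pod-template labels, copied from logging.yml.
POD_TEMPLATES = {
    "elasticsearch-logging-v1": {
        "k8s-app": "elasticsearch-logging",
        "version": "v1",
        "kubernetes.io/cluster-service": "true",
    },
    "fluentd-es-v1.22": {
        "k8s-app": "fluentd-es",
        "kubernetes.io/cluster-service": "true",
        "version": "v1.22",
    },
    "kibana-logging": {"k8s-app": "kibana-logging"},
}

# Selectors, copied from the same file.
SELECTORS = {
    "Service/elasticsearch-logging": {"k8s-app": "elasticsearch-logging"},
    "Service/kibana-logging": {"k8s-app": "kibana-logging"},
    "ReplicationController/elasticsearch-logging-v1": {
        "k8s-app": "elasticsearch-logging",
        "version": "v1",
    },
    "Deployment/kibana-logging": {"k8s-app": "kibana-logging"},
}


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every key/value pair must be present on the pod."""
    return all(labels.get(k) == v for k, v in selector.items())


def selected_templates(selector: dict) -> list:
    return sorted(name for name, labels in POD_TEMPLATES.items() if matches(selector, labels))


for owner, selector in SELECTORS.items():
    print(owner, "->", selected_templates(selector))
```

Each selector should select exactly one template; an empty list points to a label mismatch worth fixing before applying the file.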

2.5 Install

kubectl apply -f sa.yml

kubectl apply -f cm.yml

kubectl apply -f pv.yml

kubectl apply -f logging.yml
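Since the components can take a long time to start, a small polling loop saves refreshing by hand. The Python sketch below runs kubectl get pods with a label selector and -o json, and treats the pods as ready once every container status reports ready. The timeout and interval values and all function names are assumptions; the fetch and sleep parameters exist only so the logic can be exercised without a cluster.

```python
import json
import subprocess
import time
from typing import Callable


def pods_ready(pod_list: dict) -> bool:
    """True if the list has pods and every container in every pod is ready."""
    items = pod_list.get("items", [])
    if not items:
        return False
    for pod in items:
        statuses = pod.get("status", {}).get("containerStatuses", [])
        if not statuses or not all(s.get("ready") for s in statuses):
            return False
    return True


def kubectl_pods(selector: str, namespace: str = "kube-system") -> dict:
    """Fetch the pods matching a label selector as parsed JSON."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-l", selector, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def wait_until_ready(
    selector: str,
    fetch: Callable[[str], dict] = kubectl_pods,
    timeout: float = 1800,
    interval: float = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until the selected pods are ready or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if pods_ready(fetch(selector)):
            return True
        sleep(interval)
    return False


if __name__ == "__main__":
    for sel in ("k8s-app=elasticsearch-logging", "k8s-app=fluentd-es", "k8s-app=kibana-logging"):
        print(sel, "ready" if wait_until_ready(sel) else "timed out")
```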

2.6 验证

-

上步完成后要等待相当长的时间,请耐心等待。

-

查看

kibana与Elasticsearch访问地址:kubectl cluster-infoKubernetes master is running at https://Master-IP:6443 Elasticsearch is running at https://Master-IP:6443/api/v1/namespaces/kube-system/services/elasticsearch-logging/proxy Heapster is running at https://Master-IP:6443/api/v1/namespaces/kube-system/services/heapster/proxy Kibana is running at https://Master-IP:6443/api/v1/namespaces/kube-system/services/kibana-logging/proxy KubeDNS is running at https://Master-IP:6443/api/v1/namespaces/kube-system/services/kube-dns/proxy monitoring-influxdb is running at https://Master-IP:6443/api/v1/namespaces/kube-system/services/monitoring-influxdb/proxy -

启动客户端代理

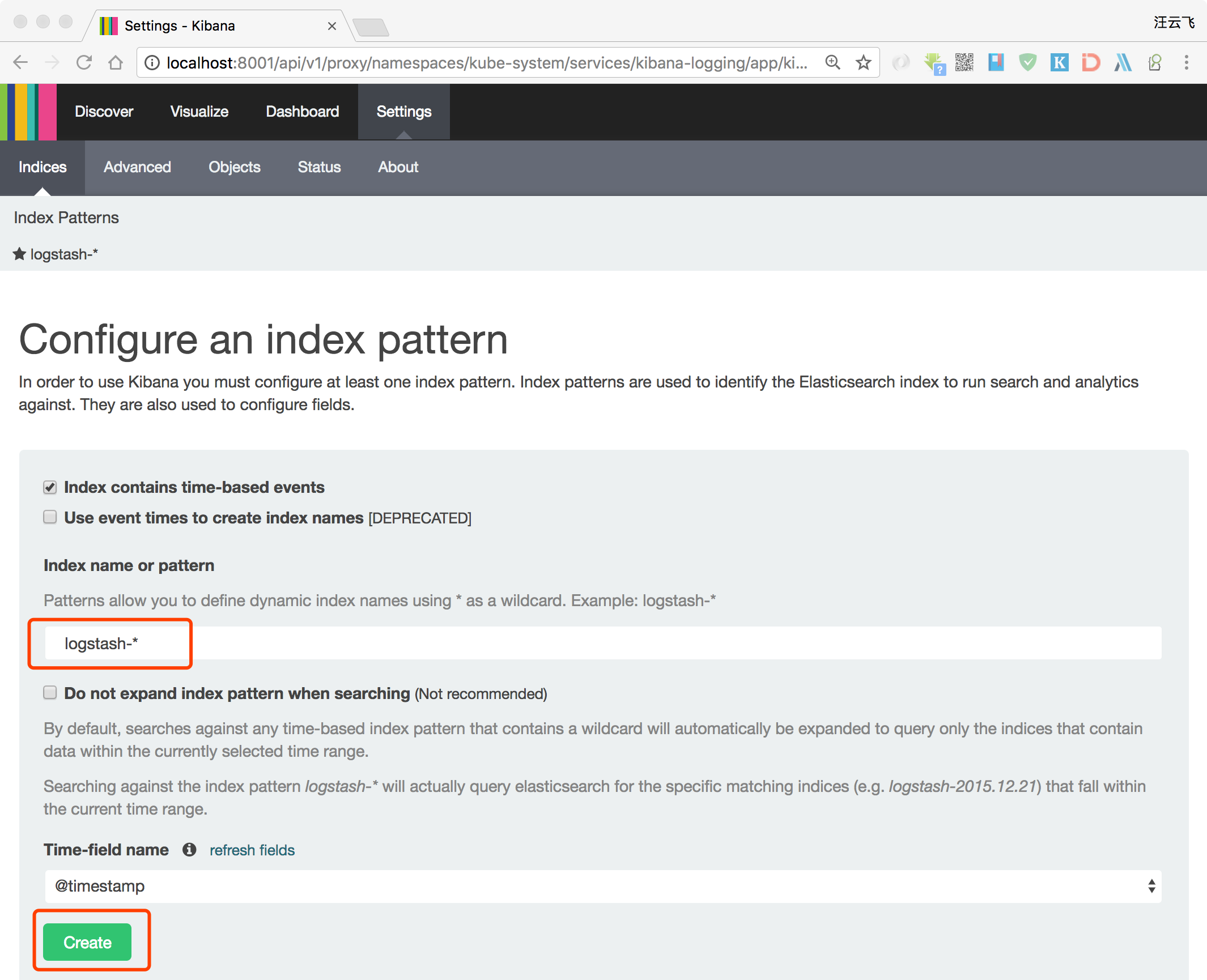

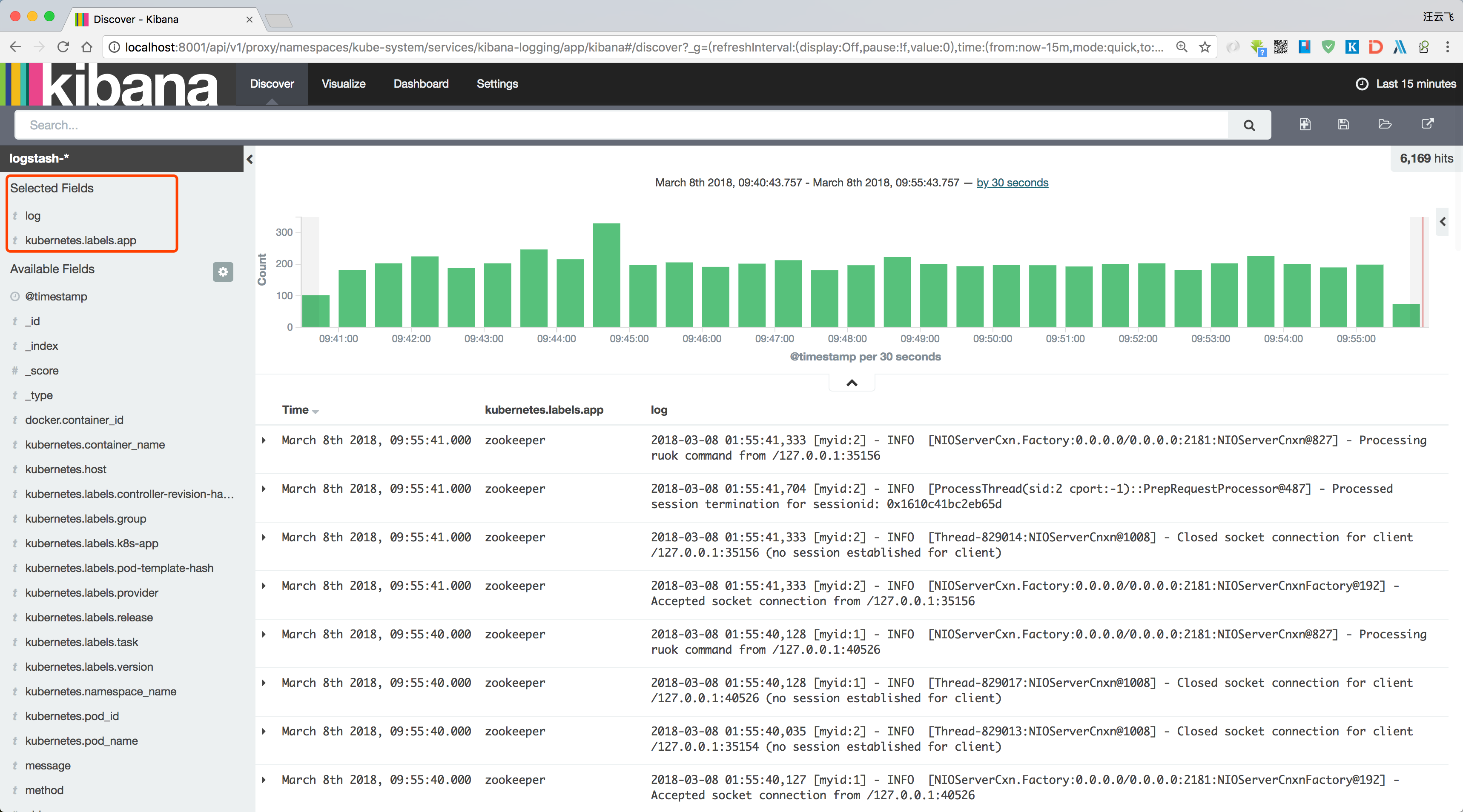

kubectl proxy,访问:http://localhost:8001/api/v1/namespaces/kube-system/services/kibana-logging/proxy
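Through kubectl proxy, every Service is reachable under the same path pattern that kubectl cluster-info prints, with the API server address replaced by the proxy's default http://localhost:8001. The Python sketch below builds those URLs, including one for Elasticsearch's _cat/indices endpoint to confirm that logs are arriving. With logstash_format true, the Elasticsearch output writes to daily indices named logstash-YYYY.MM.DD by default, so logstash-* is the index pattern to use in Kibana. The helper names are hypothetical; the sketch only builds strings and does not send any request.

```python
from datetime import date
from urllib.parse import quote

PROXY = "http://localhost:8001"  # default kubectl proxy address


def service_proxy_url(service: str, namespace: str = "kube-system", path: str = "") -> str:
    """API server service-proxy URL, as reached through kubectl proxy."""
    base = f"{PROXY}/api/v1/namespaces/{quote(namespace)}/services/{quote(service)}/proxy"
    return base + path


def logstash_index(day: date, prefix: str = "logstash") -> str:
    """Daily index name written when logstash_format is true (default prefix)."""
    return f"{prefix}-{day:%Y.%m.%d}"


print(service_proxy_url("kibana-logging"))
print(service_proxy_url("elasticsearch-logging", path="/_cat/indices?v"))
print(logstash_index(date(2018, 3, 7)))
```

Opening the second URL in a browser while kubectl proxy is running should list one logstash-* index per day once Fluentd has shipped logs.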
